China’s Supercomputer: What’s the Next Big Step?

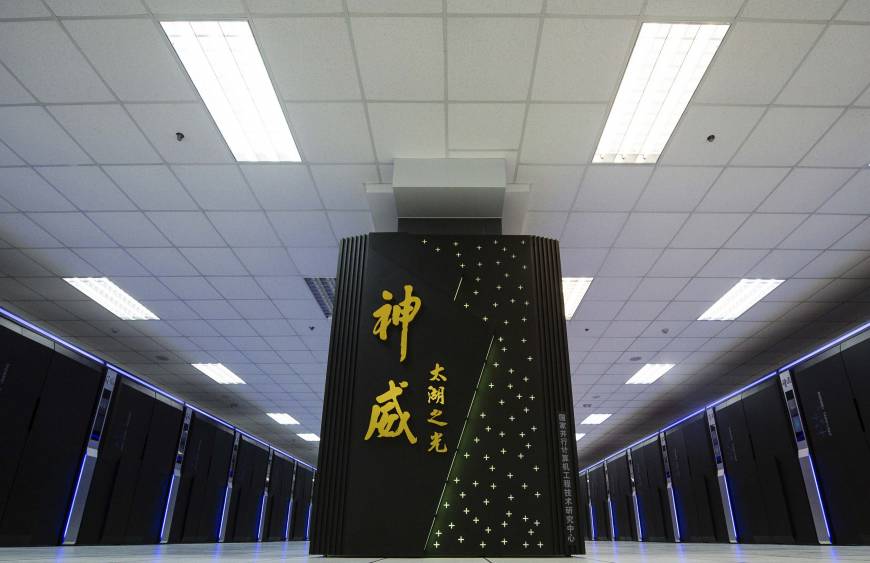

For all the advances in computing, a machine that can perform quadrillions of calculations per second long sounded like science fiction. That changed when China announced that it has built the Sunway TaihuLight, a supercomputer capable of performing not millions but quadrillions of calculations per second.

The unveiling of the TaihuLight makes a strong statement that China is not just an emerging superpower but a major player in the world of technology. For starters, every part used to build the supercomputer was made locally, meaning China depended neither on US technology nor on that of other technologically advanced nations.

Jack Dongarra, the University of Tennessee professor who created the measurement system used to rate supercomputers, said that the Chinese supercomputer is “not based on existing architecture, but a system that has Chinese processors.”

So, how powerful is this supercomputer?

Say What – Petaflops?!?

The TaihuLight has 10.65 million cores, roughly 19 times as many as its American counterpart, which has only 560,000. Each of its roughly 41,000 chips carries 260 cores, which lets the Chinese supercomputer reach 93 petaflops, that is, 93 quadrillion floating-point operations per second. Despite its power, the entire machine uses only 1.3 petabytes of memory and draws 15.3 megawatts of power, all on the first generation of China-made ShenWei chips.
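The figures above can be cross-checked with a little arithmetic. The sketch below uses only the rounded numbers quoted in this article; the constant and function names are made up for illustration, and the results are estimates rather than official benchmark values.

```python
# Rounded figures quoted in the article; all names here are illustrative.
CORES_PER_CHIP = 260
CHIPS = 41_000            # rounded chip count from the article
PEAK_FLOPS = 93e15        # 93 petaflops
POWER_WATTS = 15.3e6      # 15.3 megawatts
RIVAL_CORES = 560_000     # core count quoted for the American machine


def total_cores(cores_per_chip: int, chips: int) -> int:
    """Total core count is simply cores per chip times number of chips."""
    return cores_per_chip * chips


def gflops_per_watt(flops: float, watts: float) -> float:
    """Energy efficiency in gigaflops per watt."""
    return flops / watts / 1e9


if __name__ == "__main__":
    cores = total_cores(CORES_PER_CHIP, CHIPS)
    print(f"Total cores: {cores:,}")
    print(f"Core-count ratio vs. rival: {cores / RIVAL_CORES:.1f}x")
    print(f"Efficiency: {gflops_per_watt(PEAK_FLOPS, POWER_WATTS):.2f} Gflops/W")
```

With the rounded chip count the product comes out slightly above the 10.65 million cores quoted, which is expected since 41,000 is itself a rounded figure.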

The TaihuLight was developed and funded under the 863 program, which focuses on developing Chinese technology and ending the country’s reliance on Western technology altogether.

In a way, the Chinese have the United States to thank. The US banned the sale of US-made Intel processors to China for fear that they would be used in projects that act against US foreign interests and national security.

The US has a valid reason for such alarm, since China used the first supercomputer it built, the Tianhe-1A, in “nuclear explosive activities.” The creators of the TaihuLight were quick to dispel similar speculation about the new supercomputer, saying that it will be used in earth science, manufacturing, and earth system modeling.

From PetaFlops to Exaflops

Professor Qian, the chief scientist of the 863 program, said that China has put its 13th five-year plan into motion. The plan includes an ambitious exascale program that aims to produce exaflop computers by 2020.

For those unfamiliar with the term, an exaflop is equal to a “billion billion calculations per second.” Put another way, one exaflop is a thousand petaflops.
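To make the jump in scale concrete, here is a minimal sketch of the unit conversion. It assumes the 93-petaflop figure quoted earlier for the TaihuLight; the helper names are hypothetical and exist only for this example.

```python
import math

# SI prefixes expressed as floating-point operations per second.
PETA = 10**15
EXA = 10**18


def petaflops_to_exaflops(petaflops: float) -> float:
    """Convert petaflops to exaflops (1 exaflop = 1,000 petaflops)."""
    return petaflops * PETA / EXA


def machines_needed(target_exaflops: float, machine_petaflops: float) -> int:
    """How many machines of a given speed add up to the target, rounded up."""
    return math.ceil(target_exaflops * EXA / (machine_petaflops * PETA))


if __name__ == "__main__":
    taihulight_pf = 93  # figure quoted in the article
    print(f"TaihuLight in exaflops: {petaflops_to_exaflops(taihulight_pf):.3f}")
    print(f"TaihuLight-class machines per exaflop: {machines_needed(1, taihulight_pf)}")
```

In other words, even the TaihuLight delivers less than a tenth of an exaflop, so an exascale machine is roughly an order of magnitude beyond it.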

Aside from exascale computers, the plan also aims to build an HPC (high-performance computing) technology set that is fully controlled by China and to promote the computer industry through technology transfer. To achieve this, China is investing heavily in research on silicon photonics, new high-performance technology with 3D chip packaging, and on-chip networks.

Professor Qian said that the exascale prototype will have around “512 nodes, offering 5-10 teraflops-per-node, 10-20 Gflops per watt, point to point bandwidth greater than 200 Gbps. MPI latency should be less than 1.5 us.”
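The quoted targets imply a rough size for the prototype. The sketch below multiplies them out, under the assumption that the per-node speed and the efficiency targets can be combined freely across their ranges, which the quote does not actually say. The function names are hypothetical.

```python
# Targets quoted from Professor Qian; helper names are illustrative only.
NODES = 512
TFLOPS_PER_NODE = (5.0, 10.0)    # low and high per-node targets
GFLOPS_PER_WATT = (10.0, 20.0)   # low and high efficiency targets


def aggregate_petaflops(nodes: int, tflops_per_node: float) -> float:
    """Aggregate speed of all nodes, in petaflops."""
    return nodes * tflops_per_node * 1e12 / 1e15


def node_power_watts(tflops_per_node: float, gflops_per_watt: float) -> float:
    """Power one node would draw at a given efficiency (assumed, not quoted)."""
    return tflops_per_node * 1e12 / (gflops_per_watt * 1e9)


if __name__ == "__main__":
    low, high = (aggregate_petaflops(NODES, t) for t in TFLOPS_PER_NODE)
    print(f"Prototype speed: {low:.2f} to {high:.2f} petaflops")

    # Best case: slowest node at highest efficiency; worst case: the reverse.
    best = node_power_watts(TFLOPS_PER_NODE[0], GFLOPS_PER_WATT[1])
    worst = node_power_watts(TFLOPS_PER_NODE[1], GFLOPS_PER_WATT[0])
    print(f"Per-node power: {best:.0f} to {worst:.0f} watts")
```

By this estimate the prototype would land in the low single-digit petaflops, well short of exascale, which fits its role as a testbed rather than a finished machine.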

Introducing the Argo and the Chameleon

With China’s aggressive and ambitious plans to build more powerful supercomputers in the near future, the United States is not one to sit by and let its competitors get ahead.

Last year, President Obama issued an executive order launching the National Strategic Computing Initiative (NSCI), a project aimed at researching, developing, and deploying HPC technology. The NSCI is a joint project of the Department of Energy (DOE), the Department of Defense, and the National Science Foundation (NSF).

One of the project’s goals is to build an exascale computing system, which can give the United States an economic, security, and research advantage.

To achieve that, the DOE funded the Argo Project, a three-year collaboration among 40 researchers from four universities and three laboratories to create a prototype exascale computer with fully operational software.

The team is using Chameleon, a testbed that allows researchers to experiment with new and emerging cloud computing architectures as well as pursue new applications in cloud computing.

“Cloud computing has become a dominant method of providing computing infrastructure for Internet services. But to design new and innovative compute clouds and the applications they will run, academic researchers need much greater control, diversity and visibility into the hardware and software infrastructure than is available with commercial cloud systems today,” said Jack Brassil, a program officer in NSF’s Division of Computer and Network Systems.